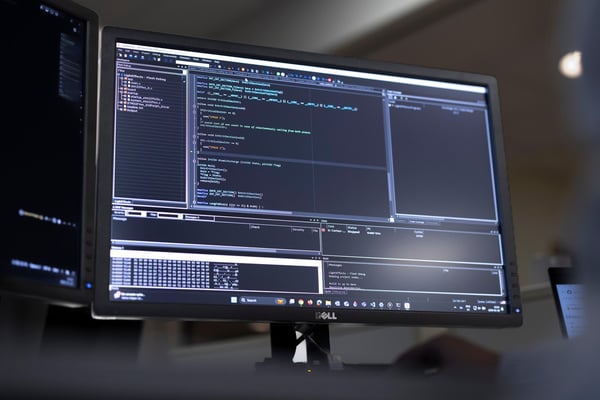

Code quality

Learn more about embedded development and get the most out of our products in our articles, videos, and recent webinars.

Move fast and break things? Not so fast in embedded

How to stay focused on your code

Everything ends with code quality

With You all the Way: From Code Quality to Total Security

The basics of C-RUN

Static analysis - Who makes the rules

Safety coding techniques for your application

Everything starts with code quality