
Reducing RAM footprint to lower BOM cost in embedded systems

- By Shawn Prestridge


In many embedded projects, RAM is not the resource engineers worry about first. Early prototypes often run comfortably on evaluation boards, test firmware grows organically, and memory usage feels “good enough”.

Then reality hits.

A new feature is added. Diagnostics expand. A security update introduces new buffers. Suddenly, RAM usage is no longer a margin; it is a blocker. And once RAM becomes the limiting factor, the consequences are rarely limited to software.

In embedded systems, running out of RAM often means redesigning hardware, selecting a larger microcontroller, or qualifying a new variant late in the project. That change ripples through the entire organization: procurement, certification, manufacturing, and long-term supply planning. The BOM goes up, schedules slip, and risk increases.

This is why RAM optimization is not just a technical concern; it is a strategic one.

Why RAM optimization matters more than ever

Unlike Flash, RAM does not scale gracefully in low- and mid-range microcontrollers. A small increase in RAM often forces:

- A jump to a higher MCU family

- A different package or pinout

- Additional power consumption

- New qualification and validation effort

For long-lifecycle products such as sensor terminals, system controllers, and industrial gateways, those changes can be extremely costly.

What makes this challenge even more acute today is that RAM pricing and availability are increasingly influenced by global market forces outside the embedded industry.

A massive surge in demand from AI systems and hyperscale data centers has reshaped the memory market. Training and deploying AI models requires enormous volumes of high-bandwidth memory, pushing manufacturers to prioritize higher-margin products and reallocate production capacity.

As a result:

- Commodity memory has become less attractive to manufacture

- Supply allocation has tightened across the market

- Pricing pressure affects the entire memory ecosystem

It’s important to distinguish how this affects different embedded architectures. Microcontroller-based designs typically rely on on-chip RAM, which is fixed at silicon design time and less directly exposed to external memory markets. MPU-based systems, by contrast, depend on off-chip DDR or LPDDR and compete more directly for the same manufacturing capacity as other commodity memory products.

While embedded systems do not use AI-class memory, they are not isolated from these dynamics. For high-volume products, even modest increases in off-chip memory cost or reductions in availability can significantly affect BOM cost, sourcing strategy, and long-term product viability. And although MCUs are less directly exposed, sustained pressure on the memory ecosystem still influences pricing, lead times, and architectural decisions over time.

Teams that actively manage RAM usage gain something far more valuable than free bytes: design flexibility. They can absorb feature growth, security updates, and compliance requirements without touching the hardware.

The silent RAM consumers: Globals and statics

One of the most common patterns seen in real projects is the slow accumulation of global and static variables. They start with good intentions: shared state, convenience, faster access. Over time, they become permanent residents in RAM.

Because global and static objects live for the entire lifetime of the system, they:

- Reduce available memory from startup

- Increase initialization overhead

- Hide true runtime memory needs

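In practice, moving a variable that only one function uses from global scope into that function often costs nothing in performance. Its storage moves to the stack and is released when the function returns, so functions that never run at the same time can reuse the same stack space instead of each holding a permanent allocation. This applies only to data that does not need to persist between calls, and the stack must still be sized for the deepest call path.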
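As a minimal sketch of this pattern, assuming a hypothetical frame-building routine (the names `build_frame` and `FRAME_MAX` and the `0x7E` start marker are illustrative, not taken from any particular driver), the scratch buffer below is declared inside the function instead of at file scope, so it only occupies stack space while the function runs:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define FRAME_MAX 64u  /* hypothetical maximum frame length in bytes */

/* Before: a file-scope buffer is reserved in RAM (.bss) for the whole
   lifetime of the system, even though only build_frame() touches it:
       static uint8_t g_frame[FRAME_MAX];                               */

/* After: the buffer is local, so its 64 bytes occupy the caller's stack
   only while build_frame() runs. Returns a simple additive checksum of
   the frame so the result can be checked. */
uint8_t build_frame(const uint8_t *payload, size_t len)
{
    uint8_t frame[FRAME_MAX];  /* released when the function returns */
    uint8_t checksum = 0u;

    assert(len + 2u <= FRAME_MAX);  /* 2-byte header + payload must fit */

    frame[0] = 0x7Eu;          /* hypothetical start-of-frame marker */
    frame[1] = (uint8_t)len;   /* payload length */
    for (size_t i = 0; i < len; ++i) {
        frame[2u + i] = payload[i];
    }
    for (size_t i = 0; i < len + 2u; ++i) {
        checksum = (uint8_t)(checksum + frame[i]);
    }
    /* A real driver would hand `frame` to a transmit routine here. */
    return checksum;
}
```

This works only because the frame does not need to survive between calls; state that must persist still belongs in a static object. The stack of whichever task calls `build_frame()` must also have room for the extra 64 bytes.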

With IAR linker map files, these long-lived allocations are easy to identify. Instead of guessing where RAM has gone, engineers can see exactly which modules and symbols consume it and decide whether that memory truly needs to be permanent.

Data types: Small decisions, large impact

In many embedded systems, memory waste does not come from one big mistake but from thousands of small ones.

Using int where uint8_t would suffice is a classic example: on a 32-bit MCU, int typically occupies 4 bytes where uint8_t needs 1. In isolation, the difference seems trivial. Across arrays, structures, queues, and stacks, it adds up quickly.

    uint8_t  status;       /* values 0..255 fit in one byte */
    uint16_t measurement;  /* e.g. a 12-bit ADC reading fits in two bytes */

Choosing the smallest correct type for stored data:

- Reduces RAM footprint

- Improves cache behavior on cores that have a data cache

- Can improve performance and power consumption by reducing memory traffic

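This is especially important in sensor-heavy systems, where data structures are replicated many times and inefficiencies scale with product complexity. The savings apply to data held in memory; for scalar locals that live in registers on a 32-bit core, a narrow type saves nothing and can cost extra extension instructions, which is the case the uint_fast8_t and uint_fast16_t types exist for.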
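The sketch below, assuming a 32-bit target where `int` is 4 bytes and a hypothetical buffer of 12-bit ADC samples, compares a record declared with `int` fields against one using the smallest types that cover each range. The names `SampleWide`, `SampleNarrow`, and `SAMPLE_COUNT` are illustrative, and `static_assert` requires C11:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SAMPLE_COUNT 64u  /* hypothetical depth of a sample history buffer */

/* Every field uses int: 4 bytes each on a typical 32-bit MCU. */
typedef struct {
    int status;       /* only ever holds 0..255 */
    int measurement;  /* 12-bit ADC reading, 0..4095 */
} SampleWide;

/* Each field uses the smallest type that covers its range.
   The larger member comes first, so at most one byte of tail padding. */
typedef struct {
    uint16_t measurement;  /* 0..4095 fits in 16 bits */
    uint8_t  status;       /* 0..255 fits in 8 bits */
} SampleNarrow;

/* Compile-time check that the narrow layout is never the larger one. */
static_assert(sizeof(SampleNarrow) <= sizeof(SampleWide),
              "narrow layout must not be larger than the int layout");

/* RAM saved by storing SAMPLE_COUNT narrow records instead of wide ones. */
size_t sample_buffer_savings(void)
{
    return SAMPLE_COUNT * (sizeof(SampleWide) - sizeof(SampleNarrow));
}
```

On such a target `SampleWide` is 8 bytes and `SampleNarrow` is 4 (3 bytes of data plus 1 byte of tail padding), so a 64-entry buffer shrinks from 512 to 256 bytes.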

Struct layout and bitfields: Hidden padding costs

Even well-written code can waste RAM through structure padding and alignment.

Reordering structure members so that larger, more strictly aligned types come first, and using bitfields for flags, can significantly reduce memory usage, particularly when structures are instantiated repeatedly or placed in arrays.

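    typedef struct
    {
        uint16_t value;      /* 2 bytes: largest member first, no padding needed */
        uint8_t  state;      /* 1 byte */
        uint8_t  flags : 3;  /* 3 flag bits in one byte; uint8_t bitfields are a
                                widely supported compiler extension (ISO C only
                                guarantees _Bool, signed int, and unsigned int) */
    } DeviceStatus;          /* typically 4 bytes on 32-bit targets */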

These optimizations are invisible at runtime but highly visible in the linker map file, and ultimately reflected in the BOM.
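A minimal sketch of both effects, assuming natural alignment (as on Arm Cortex-M under the standard Arm ABI) and a compiler that accepts `uint8_t` bitfields (GCC, Clang, and many embedded compilers do, but ISO C leaves it implementation-defined). All type names are hypothetical, and `static_assert` requires C11:

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Declaration order forces padding: 3 bytes after `state` and 1 byte after
   `channel`, giving 12 bytes for 8 bytes of data on a 32-bit target. */
typedef struct {
    uint8_t  state;
    uint32_t timestamp;
    uint8_t  channel;
    uint16_t value;
} RecordPadded;

/* Same members, largest alignment first: no padding, 8 bytes. */
typedef struct {
    uint32_t timestamp;
    uint16_t value;
    uint8_t  state;
    uint8_t  channel;
} RecordReordered;

/* Three independent bool flags take one byte each. */
typedef struct {
    bool alarm;
    bool calibrated;
    bool fault;
} FlagsAsBools;

/* The same flags packed as 1-bit fields share a single byte. */
typedef struct {
    uint8_t alarm      : 1;
    uint8_t calibrated : 1;
    uint8_t fault      : 1;
} FlagsAsBits;

static_assert(sizeof(RecordReordered) <= sizeof(RecordPadded),
              "reordering members must never increase the size");
```

Bitfields trade RAM for access cost: each update is a read-modify-write of the containing byte, which is not atomic with respect to interrupts or other tasks, and bit ordering is implementation-defined, so bitfield structs are a poor fit for portable wire formats or hardware register maps.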

Keeping constants where they belong: In Flash

A surprisingly common source of RAM waste is constant data that is copied into RAM at startup. Strings, lookup tables, and configuration data, if not handled carefully, can quietly consume precious memory.

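On most 32-bit MCU toolchains, consistently using const ensures that data remains in Flash, where it belongs (some Harvard-architecture 8-bit targets additionally require a compiler-specific memory attribute):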

    const uint16_t calibrationTable[128] = { /* Flash-resident */ };

With the IAR toolchain, data placement is explicit and predictable, an important advantage when working in regulated or safety-critical environments where memory behavior must be clearly understood and documented.
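One easy-to-miss case is a table of string pointers. Declaring it as `const char *names[]` makes the characters read-only but leaves the pointer array itself writable, so toolchains typically place that array in initialized RAM and copy it there at startup. Adding a second `const` lets the whole table stay in Flash. The sketch below uses hypothetical state names and the illustrative `ARRAY_LEN` macro, and assumes C11 for `static_assert`:

```c
#include <assert.h>
#include <stddef.h>

#define ARRAY_LEN(a) (sizeof(a) / sizeof((a)[0]))

typedef enum { STATE_IDLE, STATE_RUN, STATE_FAULT, STATE_COUNT } State;

/* Writable pointer array (typically .data, copied to RAM at startup):
   static const char *state_names_in_ram[] = { "IDLE", "RUN", "FAULT" }; */

/* Both the strings and the pointers are const, so the table can stay in a
   read-only (Flash) section. */
static const char *const state_names[] = { "IDLE", "RUN", "FAULT" };

/* Catch a table that falls out of sync with the enum at compile time. */
static_assert(ARRAY_LEN(state_names) == STATE_COUNT,
              "state_names must have one entry per State");

/* Returns the name for a state, or "UNKNOWN" for out-of-range values. */
const char *state_name(State state)
{
    return ((size_t)state < ARRAY_LEN(state_names)) ? state_names[state]
                                                    : "UNKNOWN";
}
```

The linker map file is the quickest way to confirm the result: the table should appear in a read-only section rather than among the initialized RAM data.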

Dynamic allocation: Flexible, but dangerous

Dynamic memory allocation is attractive because it feels flexible. In embedded systems, that flexibility often comes at a high price:

- Fragmentation over time

- Non-deterministic allocation failures

- Hard-to-debug runtime crashes

For systems that must run continuously for months or years, dynamic allocation is rarely worth the risk.

Many teams replace heap usage with:

- Static buffers

- Fixed-size memory pools

- Application-specific allocators

The result is predictable memory behavior, simpler testing, and improved system reliability, particularly important when RAM margins are tight.
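A fixed-size pool is one common way to replace the heap. The minimal sketch below hands out equal-sized blocks from a statically allocated array through a singly linked free list, so allocation and release take constant time and cannot fragment. The block size, block count, and `pool_*` function names are hypothetical, and the code is not thread-safe as written; in an RTOS, calls would need to be wrapped in a critical section or mutex:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define POOL_BLOCK_SIZE  32u  /* hypothetical bytes per block */
#define POOL_BLOCK_COUNT 8u   /* hypothetical number of blocks */

/* A free block stores the link to the next free block in its own storage;
   the union also gives every block at least pointer alignment. */
typedef union PoolBlock {
    union PoolBlock *next;
    uint8_t data[POOL_BLOCK_SIZE];
} PoolBlock;

static PoolBlock pool_storage[POOL_BLOCK_COUNT];  /* fixed at link time */
static PoolBlock *pool_free_list;                 /* head of free list */

/* Thread every block onto the free list; call once at startup. */
void pool_init(void)
{
    for (size_t i = 0; i + 1u < POOL_BLOCK_COUNT; ++i) {
        pool_storage[i].next = &pool_storage[i + 1u];
    }
    pool_storage[POOL_BLOCK_COUNT - 1u].next = NULL;
    pool_free_list = &pool_storage[0];
}

/* Constant time; returns NULL when every block is in use. */
void *pool_alloc(void)
{
    PoolBlock *block = pool_free_list;
    if (block != NULL) {
        pool_free_list = block->next;
    }
    return block;
}

/* Constant time; the block goes back to the head of the free list. */
void pool_free(void *p)
{
    PoolBlock *block = p;
    assert(block >= &pool_storage[0] &&
           block < &pool_storage[POOL_BLOCK_COUNT]);
    block->next = pool_free_list;
    pool_free_list = block;
}
```

Because the pool's size is fixed at link time, it appears in the linker map file like any other static object, and exhaustion shows up as a NULL return that the caller must handle rather than as gradual fragmentation.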

Stack usage: The invisible failure mode

Stack overflows are among the most difficult bugs to diagnose. They may only appear under rare conditions or specific task interleavings caused by scheduling and timing effects.

Large local variables, deep call chains, or recursion can quickly consume stack space, especially in RTOS-based systems.

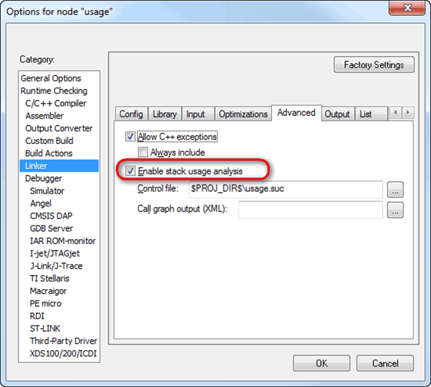

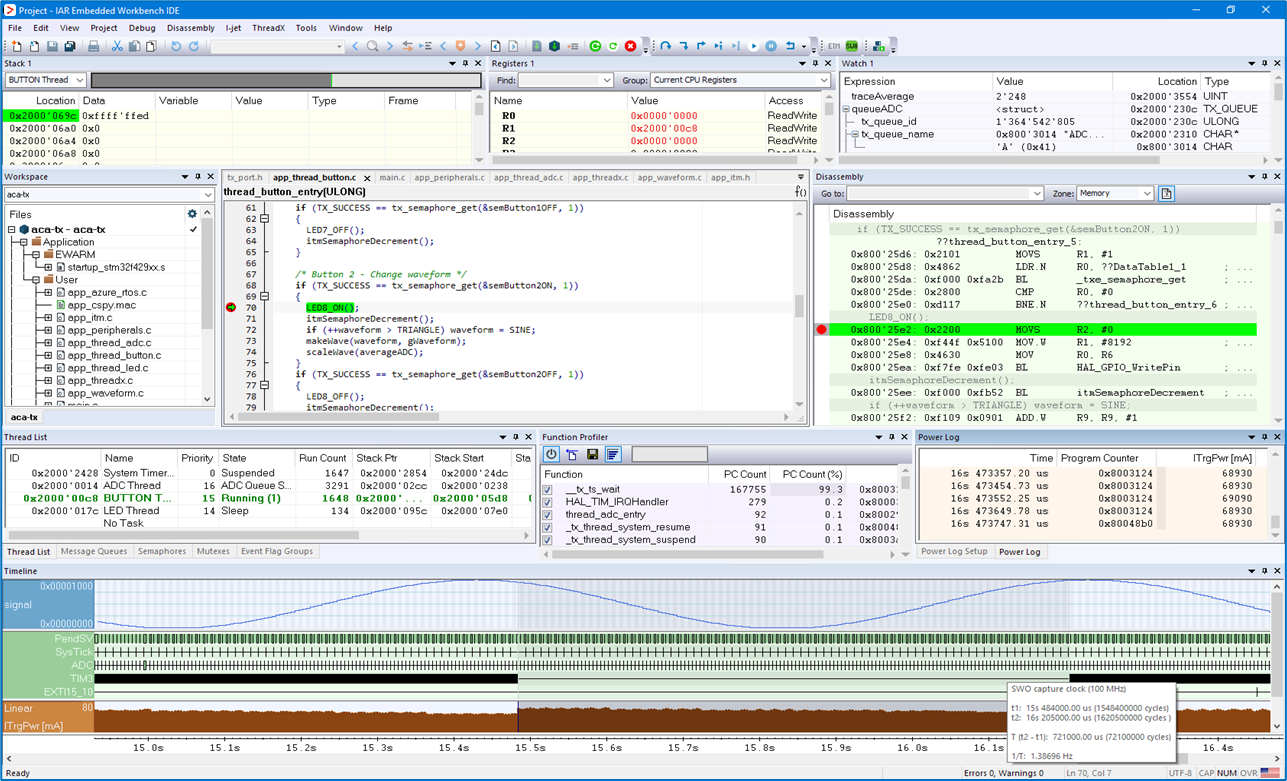

Using IAR’s stack analysis tools, developers can:

- Estimate worst-case stack usage

- Size stacks appropriately

- Avoid late-stage failures that are otherwise hard to reproduce
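Static analysis can be complemented at runtime with stack painting: fill a stack region with a known pattern before it is used, then later count how many words were never overwritten. This is a different technique from IAR's static stack analysis, and it reports only the deepest usage actually observed during testing, not the worst case. The sketch below assumes a descending stack (as on Arm Cortex-M), where unused words sit at the low end; the fill value and function names are hypothetical, and on a real target the base address and size would come from linker-defined symbols. Many RTOSes build in the same idea, for example FreeRTOS's `uxTaskGetStackHighWaterMark()`:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define STACK_FILL_PATTERN 0xA5A5A5A5u  /* hypothetical fill value */

/* Fill a stack region with a known pattern. Call it only for a region
   that is not currently in use, e.g. a task stack before the task starts. */
void stack_paint(uint32_t *base, size_t words)
{
    assert(base != NULL);
    for (size_t i = 0; i < words; ++i) {
        base[i] = STACK_FILL_PATTERN;
    }
}

/* With a descending stack, the untouched words sit at the low end, so
   counting pattern words from `base` upward gives the remaining headroom
   at the deepest point observed so far. */
size_t stack_unused_words(const uint32_t *base, size_t words)
{
    size_t n = 0;
    while (n < words && base[n] == STACK_FILL_PATTERN) {
        ++n;
    }
    return n;
}
```

A value that happens to equal the fill pattern can make usage look slightly smaller than it was, which is one more reason to treat the measured headroom as a lower bound on what the stack needs, not a replacement for worst-case analysis.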

Compiler and linker optimization: More than code size

Compiler optimization for size (for example, -Os in GCC and Clang) is often associated with Flash savings, but it can also have a direct impact on RAM usage:

- Fewer temporary variables

- Reduced stack pressure

- Less initialized data

Combined with section garbage collection, which lets the linker discard unreferenced functions and data, and with regular linker map analysis, these techniques prevent memory usage from creeping upward unnoticed as software evolves.

RTOS configuration: Pay only for what you use

RTOS kernels are powerful, but default configurations are often conservative. Excessive stack sizes, unused features, and generic settings can consume significant RAM.

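Tuning RTOS configuration files task by task, sizing each stack from its analyzed or measured peak usage and disabling kernel features the application does not use, often frees enough memory to avoid a hardware upgrade entirely.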
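As a sketch of the task-by-task approach, the hypothetical helpers below take each task's configured stack and its peak usage (from stack analysis or a high-water-mark measurement), add a safety margin, round up to the stack alignment, and report how much could be reclaimed. The 25% margin and 2-word (8-byte) alignment used in the example are illustrative assumptions, not recommendations:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-task record: configured stack size and the peak usage
   found by stack analysis or a high-water-mark measurement, in words. */
typedef struct {
    const char *name;
    uint32_t configured_words;
    uint32_t peak_words;
} TaskStackBudget;

/* Peak usage plus a percentage margin (rounded up), then rounded up to a
   multiple of the stack alignment. */
uint32_t tuned_stack_words(uint32_t peak_words, uint32_t margin_percent,
                           uint32_t align_words)
{
    assert(align_words > 0u);
    uint32_t with_margin = (peak_words * (100u + margin_percent) + 99u) / 100u;
    return (with_margin + align_words - 1u) / align_words * align_words;
}

/* Sum of the words that could be given back across all tasks. Tasks whose
   tuned size exceeds their configuration contribute nothing here; they are
   candidates for a larger stack instead. */
uint32_t reclaimable_words(const TaskStackBudget *tasks, size_t count,
                           uint32_t margin_percent, uint32_t align_words)
{
    uint32_t total = 0u;
    for (size_t i = 0; i < count; ++i) {
        uint32_t tuned = tuned_stack_words(tasks[i].peak_words,
                                           margin_percent, align_words);
        if (tuned < tasks[i].configured_words) {
            total += tasks[i].configured_words - tuned;
        }
    }
    return total;
}
```

With a hypothetical "sensor" task configured for 512 words and peaking at 300, the tuned size is 376 words, freeing 136 words (544 bytes). A "comms" task configured for 1024 words but peaking at 900 would need 1126, so the same pass also flags an undersized stack.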

From RAM optimization to BOM savings

The real value of RAM optimization is measured not in bytes but in options.

Teams that actively manage RAM:

- Stay on smaller MCU variants longer

- Avoid late hardware changes

- Reduce supply-chain risk

- Lower BOM cost without sacrificing functionality

With the IAR embedded development platform, RAM usage becomes visible, predictable, and manageable, turning memory optimization from a firefighting exercise into a repeatable engineering practice.

Summary

In embedded systems, every byte of RAM has a cost. Sometimes that cost appears in silicon. Sometimes it shows up in schedules, certifications, or supply chains.

In a world where global memory demand is increasingly shaped by AI and data centers, even “pure” embedded systems feel the pressure.

By treating RAM as a first-class design constraint and using the right tools to manage it, embedded teams can build systems that are not only efficient, but also economically resilient.
